Driving Inferencing to the Edge

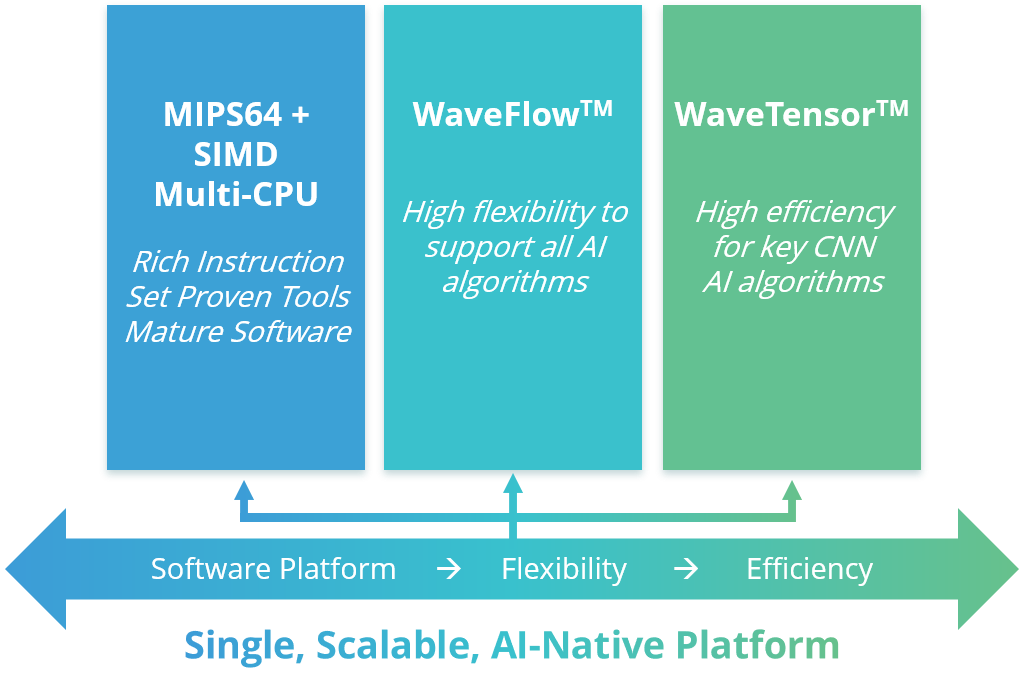

Wave Computing’s customizable, AI-enabled platform combines three technologies to efficiently address use-case requirements for inferencing at the edge.

MIPS64 + SIMD Multi-CPU

Multi-core, multi-threaded, multi-cluster MIPS™ CPUs

WaveFlow Technology

Patented, scalable dataflow platform designed to efficiently execute existing and future algorithms

WaveTensor Technology

Highly efficient, configurable, multi-dimensional TensorCore processing engines

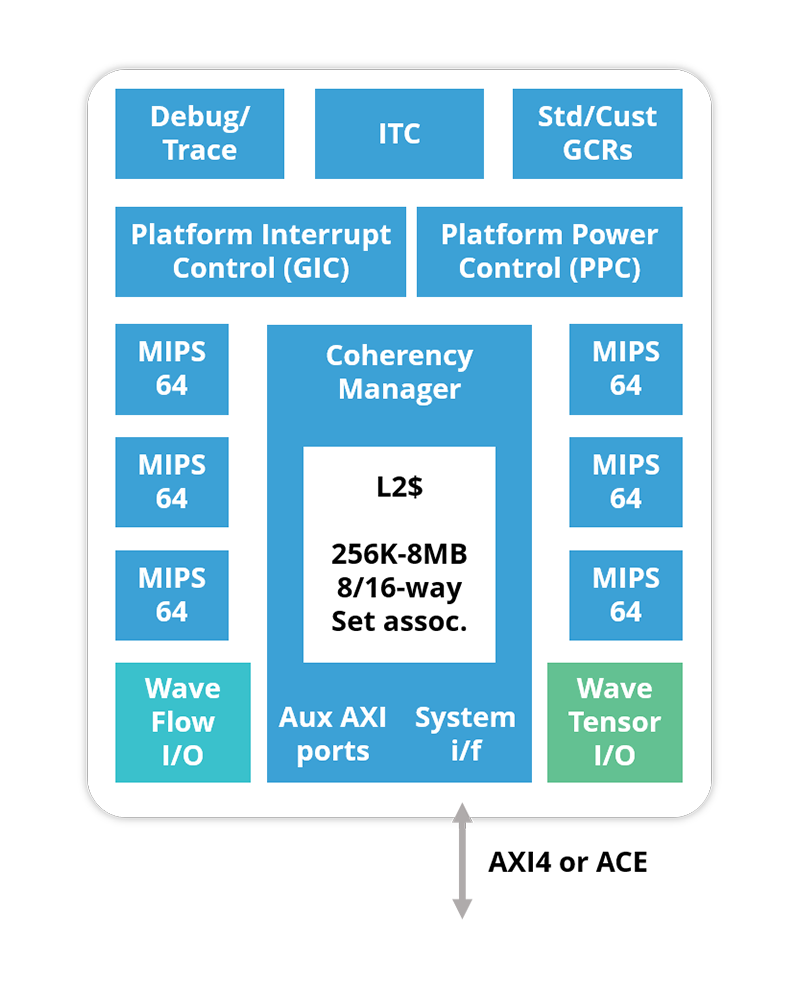

MIPS64r6 ISA:

Multi-Processor Cluster:

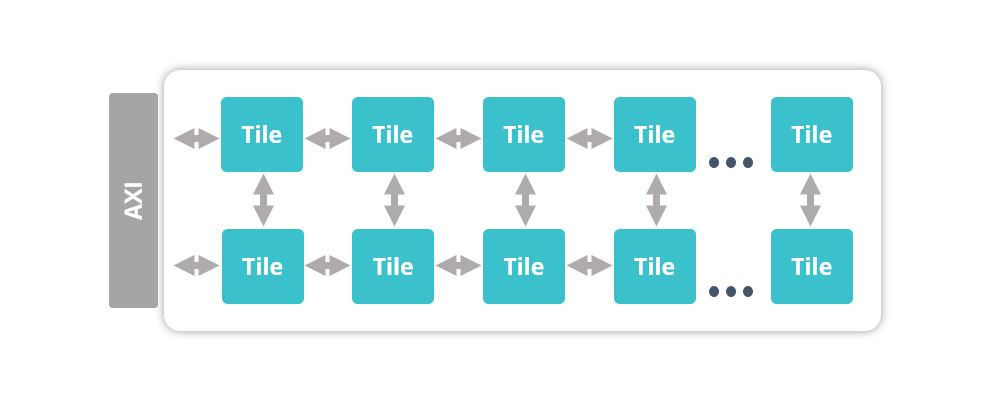

WaveFlow Reconfigurable Architecture

WaveTensor Reconfigurable Architecture

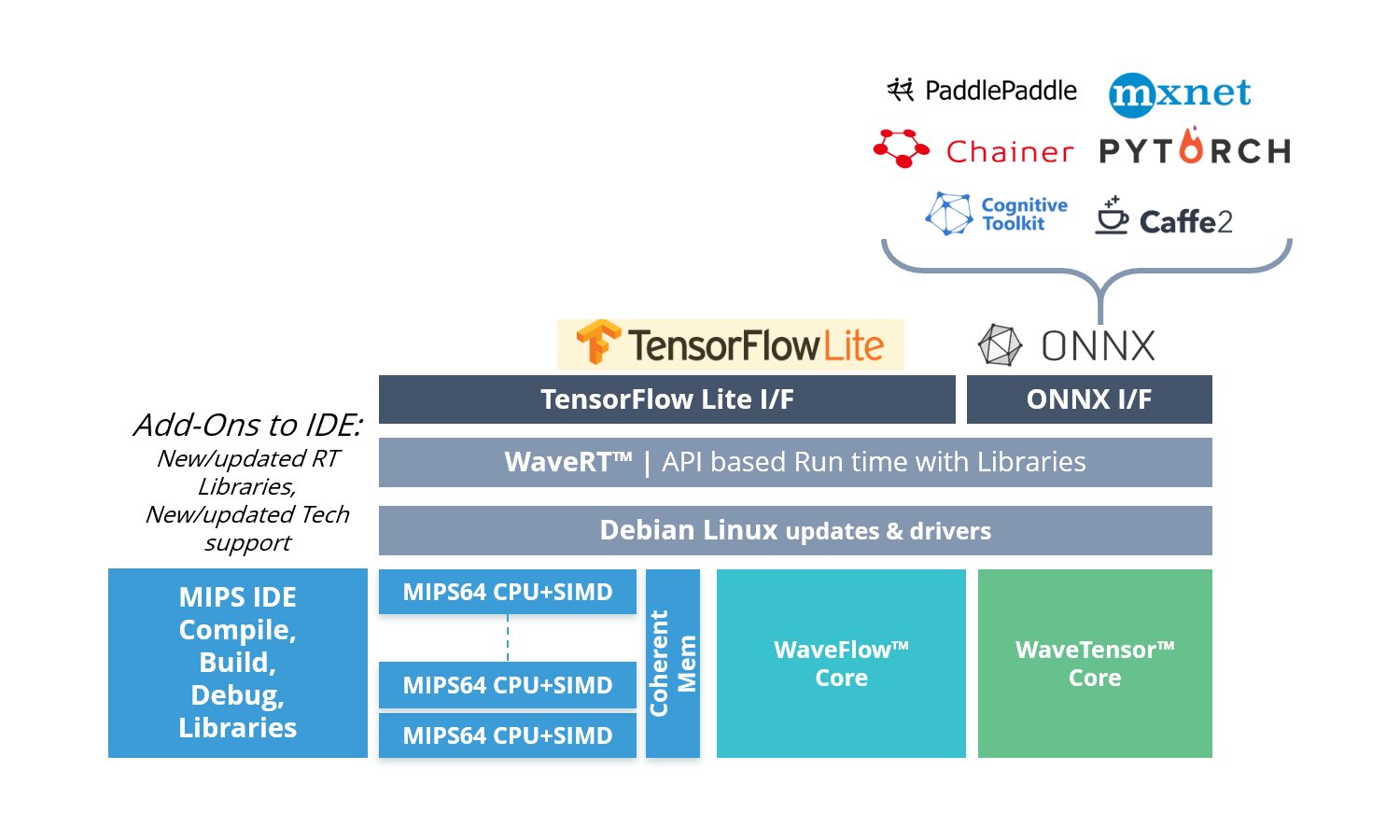

TritonAI Application Programming Kit (APK)

The TritonAI 64 platform provides the inferencing power needed for today’s AI edge applications, with the flexibility and scalability to address the rapidly evolving needs of the innovative AI industry.

By combining Wave’s unique, patented WaveFlow processor technology with its proven, efficient MIPS core designs, Wave is powering the next generation of AI with a single platform that scales from the datacenter to the edge.